Underworld 2.11 Scaling

How does Underworld scale on an HPC system? In this post we show how Underworld 2.11 scales on two of Australia's premier HPC systems.

The reference model for this scaling study is an extended 3D Stokes flow problem based on the analytic solution SolDB3D. We used Q1P0 elements and a fixed number of solver iterations for the saddle-point problem. We extended the model with extra routines (swarm advection and the advection-diffusion equation solver) to capture all the main algorithms used by a typical Underworld thermo-mechanical model.

The results are split into strong and weak scaling:

- Strong scaling: varying the number of CPUs for a fixed model resolution.

- Weak scaling: varying both the number of CPUs and the model resolution so that the work per core stays constant, which investigates the parallel efficiency of the algorithms.

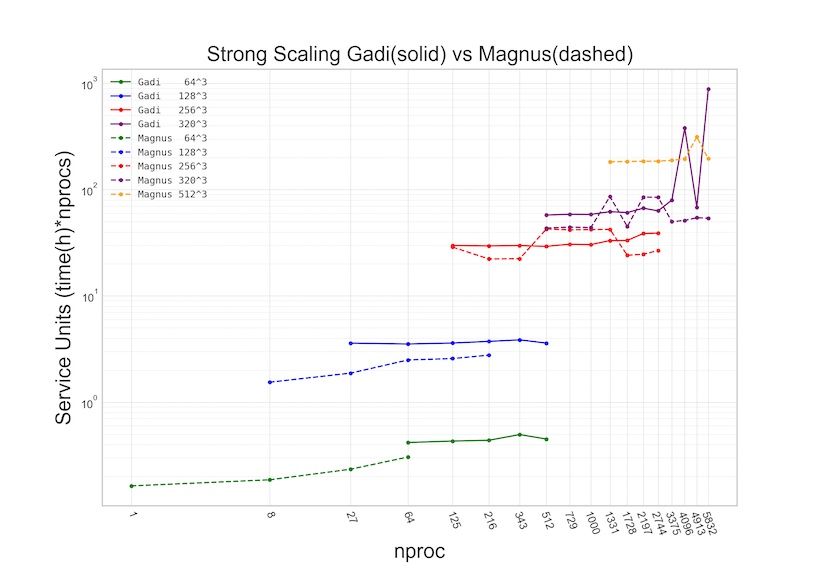

Strong scaling

The following graph shows runs at various resolutions over a range of CPU counts (nproc). The y-axis is measured in service units (SU) to capture the cost of using the compute resources.

For ideal scaling one would expect a family of flat lines: doubling the number of CPUs should halve the runtime, yielding a constant service unit cost.
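The service-unit arithmetic behind those flat lines can be sketched as follows. This assumes a run's cost is cores times wall-clock hours times a per-core-hour charge rate. The rate and timings are hypothetical placeholders, not values from these runs, and each facility sets its own charging rules.

```python
def service_units(nproc: int, walltime_s: float, su_per_core_hour: float = 1.0) -> float:
    """Cost of a run in service units (SU).

    Assumes SU = cores x wall-clock hours x charge rate; the default rate
    is a hypothetical placeholder, since each facility sets its own.
    """
    return nproc * (walltime_s / 3600.0) * su_per_core_hour


def strong_scaling_efficiency(nproc_ref: int, walltime_ref: float,
                              nproc: int, walltime: float) -> float:
    """Parallel efficiency relative to a reference run (1.0 = ideal).

    This is the ratio of the two core-time products, so ideal strong
    scaling is equivalent to a constant SU cost.
    """
    return (nproc_ref * walltime_ref) / (nproc * walltime)


# Hypothetical ideal runs: doubling cores halves the runtime, so cost is flat.
runs = [(48, 3600.0), (96, 1800.0), (192, 900.0)]  # (nproc, seconds)
costs = [service_units(p, t) for p, t in runs]
print(costs)  # [48.0, 48.0, 48.0]

# Hypothetical non-ideal run: 192 cores taking 1200 s instead of 900 s.
print(strong_scaling_efficiency(48, 3600.0, 192, 1200.0))  # 0.75
```

A rising line on the SU plot corresponds to an efficiency below 1.0 in this sketch.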

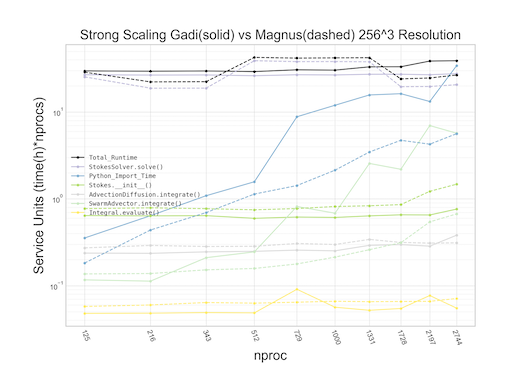

The following graph shows a function breakdown for a single model size of 256^3 elements.

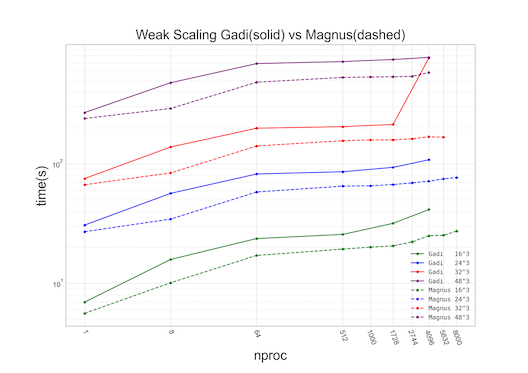

Weak scaling

The weak scaling plots show the effect of keeping the amount of work per CPU constant (element count per CPU in the legend) while increasing the number of CPUs used. This investigates the parallel efficiency of the code.
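Weak-scaling efficiency can be sketched in the same way. With fixed work per CPU the ideal runtime stays constant, so efficiency is the reference runtime divided by the runtime at a larger CPU count. The mesh-size helper assumes a hypothetical cubic decomposition in which each CPU owns an n^3 block of elements. Underworld's actual domain decomposition may differ, and the timings are invented for illustration.

```python
def weak_scaling_efficiency(walltime_ref: float, walltime: float) -> float:
    """Efficiency relative to the smallest run; 1.0 means perfect weak scaling."""
    return walltime_ref / walltime


def global_resolution(elements_per_cpu_side: int, nproc: int) -> int:
    """Global mesh side length for a hypothetical cubic decomposition.

    Assumes nproc is a perfect cube and each CPU owns an
    elements_per_cpu_side**3 block of elements.
    """
    side = round(nproc ** (1.0 / 3.0))
    if side ** 3 != nproc:
        raise ValueError("nproc must be a perfect cube for this layout")
    return elements_per_cpu_side * side


# 32^3 elements per CPU: the global mesh grows with the CPU count.
for nproc in (1, 8, 64, 512):
    n = global_resolution(32, nproc)
    print(f"{nproc:4d} CPUs -> {n}^3 elements")

# Hypothetical timings: runtime grew from 100 s to 125 s.
print(weak_scaling_efficiency(100.0, 125.0))  # 0.8
```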

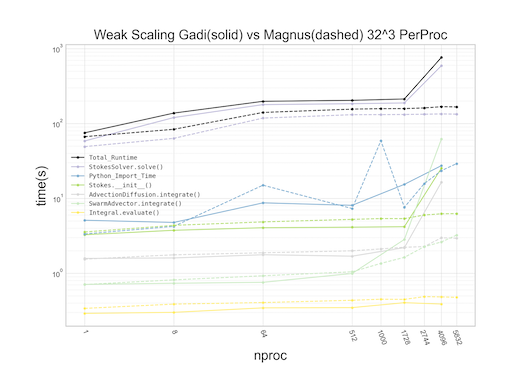

The following is a function breakdown of the weak scaling results for a model with 32^3 elements per CPU.

Unfortunately, we were only able to run each scaling model once (sometimes twice) because of our limited time allocations on the two HPC facilities. Ideally, we would repeat each model configuration at least three times and average the run times to obtain more statistically sound results.

We hope the Underworld user community finds these results useful for understanding what to expect when running Underworld on HPC facilities.

For anyone wanting to reproduce these results, all scripts used to run the models and analyse the results are stored here.

Finally, we thank the following projects and support schemes:

- Project m18: Moresi, L. Instabilities in the convecting mantle and lithosphere.

- Project q97: Mueller, D. Geodynamics and evolution of sedimentary systems.

- Sydney Informatics Hub HPC Allocation Scheme, which is supported by the Deputy Vice-Chancellor (Research), University of Sydney and the ARC LIEF, 2019: Smith, Muller, Thornber et al., Sustaining and strengthening merit-based access to National Computational Infrastructure (LE190100021).
